Appendix 10: The Hadoop Ecosystem in a Nutshell

http://www.sagarjain.com/the-hadoop-ecosystem-in-a-nutshell/

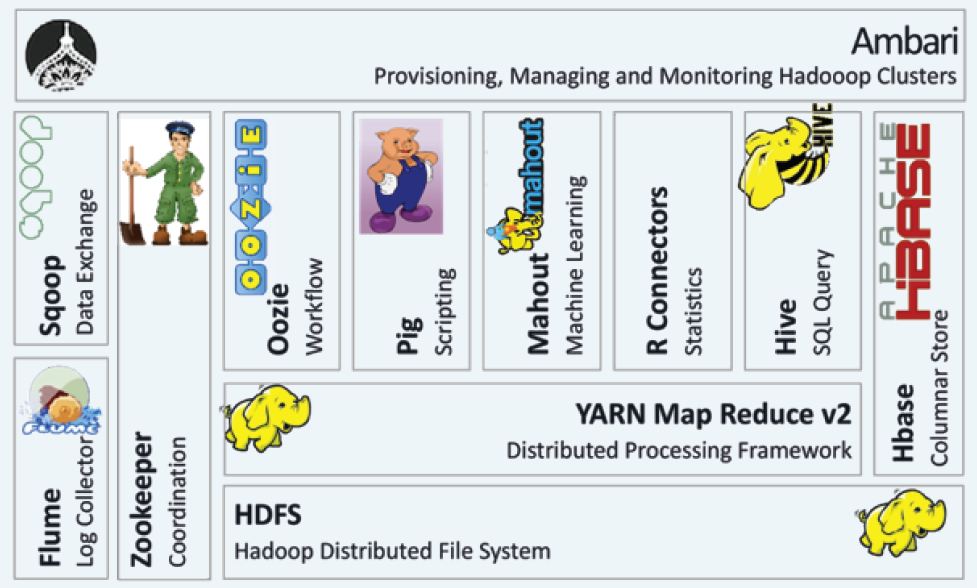

The picture above shows the Hadoop ecosystem and its components. Let's briefly go over what each component does.

HDFS: The Hadoop Distributed File System is, as the name suggests, a distributed file system that can be installed on commodity servers. HDFS stores large data files across many machines. It is designed to be fault tolerant thanks to its data replication feature. Learn more about HDFS at Apache.
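
To make "large files on many machines" and "fault tolerant through replication" concrete, here is a small simulation. It splits a file into fixed-size blocks and gives each block several replicas on distinct nodes. It then checks whether every block survives a node failure. The 128 MB block size and replication factor of 3 are the usual HDFS defaults. The node names and the round-robin placement are illustrative assumptions. Real HDFS uses a rack-aware placement policy, and this sketch does not model it.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # 128 MB: default HDFS block size in Hadoop 2.x and later
REPLICATION = 3                 # default HDFS replication factor


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks a file of `file_size` bytes is split into."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes (simple round-robin, NOT rack-aware)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def survives(placement, failed_nodes):
    """True if every block still has at least one replica on a node that has not failed."""
    return all(
        any(node not in failed_nodes for node in replicas)
        for replicas in placement.values()
    )


# Usage: a 300 MB file on four hypothetical DataNodes.
blocks = split_into_blocks(300 * 1024 * 1024)   # two full blocks plus one 44 MB block
placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print(survives(placement, {"dn3"}))             # True: one failed node loses no data
```

With three replicas per block, losing any single node never makes a block unreadable. Losing three nodes can, which is why the replication factor is a trade-off between storage cost and resilience.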

YARN: Yet Another Resource Negotiator, also known as MapReduce 2.0 (MRv2). It is a framework for job scheduling and for managing resources on a cluster. Learn more about YARN at Apache.
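
The core idea behind "managing resources on a cluster" is matching container requests (memory and CPU) against the free capacity of worker nodes. The toy allocator below does this first-fit, in arrival order. `Node`, `ContainerRequest` and `allocate` are hypothetical names, not YARN APIs. Real YARN clusters use pluggable schedulers such as the Capacity Scheduler or the Fair Scheduler, which are considerably more sophisticated.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """A worker node with free capacity (hypothetical stand-in for a NodeManager)."""
    name: str
    free_mb: int
    free_vcores: int


@dataclass
class ContainerRequest:
    """A request for one container of a given size on behalf of an application."""
    app: str
    mb: int
    vcores: int


def allocate(requests, nodes):
    """First-fit, FIFO allocation: grant each request on the first node with enough room.

    Returns (grants, pending): grants are (app, node_name) pairs, and pending holds
    the requests that could not be placed anywhere.
    """
    grants, pending = [], []
    for req in requests:
        for node in nodes:
            if node.free_mb >= req.mb and node.free_vcores >= req.vcores:
                node.free_mb -= req.mb
                node.free_vcores -= req.vcores
                grants.append((req.app, node.name))
                break
        else:  # no node had room for this request
            pending.append(req)
    return grants, pending


# Usage: two nodes, four container requests from two applications.
nodes = [Node("nm1", 4096, 4), Node("nm2", 2048, 2)]
requests = [
    ContainerRequest("app1", 2048, 1),
    ContainerRequest("app1", 2048, 1),
    ContainerRequest("app2", 2048, 2),
    ContainerRequest("app2", 1024, 1),
]
grants, pending = allocate(requests, nodes)
print(grants)                      # [('app1', 'nm1'), ('app1', 'nm1'), ('app2', 'nm2')]
print([r.app for r in pending])    # ['app2']
```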

Flume: A tool (or service) for collecting, aggregating, and moving large amounts of log data into Hadoop. More information can be found here.
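
A Flume agent is built from a source, a channel, and a sink. The source receives events, the channel buffers them, and the sink writes them onward (for example to HDFS) in batches. The sketch below models that pipeline in memory. `MemoryChannel` and `run_agent` are hypothetical names, only loosely inspired by Flume's memory channel. A Python list stands in for the HDFS sink.

```python
from collections import deque


class MemoryChannel:
    """Bounded in-memory buffer between a source and a sink."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.events = deque()

    def put(self, event):
        if len(self.events) >= self.capacity:
            raise OverflowError("channel full")
        self.events.append(event)

    def take_batch(self, batch_size):
        """Remove and return up to batch_size events, oldest first."""
        batch = []
        while self.events and len(batch) < batch_size:
            batch.append(self.events.popleft())
        return batch


def run_agent(log_lines, channel, batch_size=2):
    """Source pushes each log line into the channel; the sink drains it in batches."""
    sink = []  # stands in for the HDFS sink; each entry is one written batch
    for line in log_lines:
        channel.put({"body": line})                  # source side
        if len(channel.events) >= batch_size:        # sink side: flush a full batch
            sink.append([e["body"] for e in channel.take_batch(batch_size)])
    while channel.events:                            # flush whatever is left
        sink.append([e["body"] for e in channel.take_batch(batch_size)])
    return sink


print(run_agent(["GET /a", "GET /b", "POST /c"], MemoryChannel(capacity=10)))
# [['GET /a', 'GET /b'], ['POST /c']]
```

The channel decouples the rate at which logs arrive from the rate at which the sink can write them. The bounded capacity makes back-pressure explicit: a full channel raises `OverflowError` instead of growing without limit.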

ZooKeeper: A framework that provides highly reliable distributed coordination for the nodes in a cluster. Check out this video on ZooKeeper.
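
A classic example of "distributed coordination" is leader election with ephemeral sequential znodes. Each candidate creates such a node under a common parent, and the owner of the lowest sequence number is the leader. When the leader's session ends, its ephemeral node disappears and the next candidate takes over. The in-memory `FakeZooKeeper` below is a hypothetical stand-in used only to illustrate the recipe. It is not a ZooKeeper client API, and it uses one global sequence counter rather than one per parent znode.

```python
class FakeZooKeeper:
    """In-memory stand-in for a ZooKeeper ensemble; models only ephemeral sequential znodes."""

    def __init__(self):
        self.counter = 0
        self.znodes = {}  # znode path -> session that created it

    def create_ephemeral_sequential(self, prefix, session):
        # ZooKeeper appends a 10-digit, zero-padded sequence number to sequential znodes.
        path = f"{prefix}{self.counter:010d}"
        self.counter += 1
        self.znodes[path] = session
        return path

    def children(self, parent):
        return sorted(p for p in self.znodes if p.startswith(parent + "/"))

    def close_session(self, session):
        # Ephemeral znodes are deleted when the session that created them ends.
        self.znodes = {p: s for p, s in self.znodes.items() if s != session}


def current_leader(zk, parent="/election"):
    """The candidate owning the lowest-numbered znode under `parent` is the leader."""
    kids = zk.children(parent)
    return zk.znodes[kids[0]] if kids else None


zk = FakeZooKeeper()
for candidate in ["node-a", "node-b", "node-c"]:
    zk.create_ephemeral_sequential("/election/candidate-", candidate)
print(current_leader(zk))   # node-a
zk.close_session("node-a")  # the leader crashes or disconnects
print(current_leader(zk))   # node-b
```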

Sqoop: Sqoop (“SQL-to-Hadoop”) is a tool for efficiently transferring bulk data between Hadoop and structured data stores such as relational databases. Imported data can land in HDFS or in other Hadoop data stores, e.g. Hive or HBase. Explore more on Sqoop here.
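
Sqoop parallelizes an import by splitting the table on a column (chosen with `--split-by`, usually the primary key). It finds the column's minimum and maximum and gives each of the `--num-mappers` map tasks (4 by default) one range of values. The sketch below imitates that idea with the standard-library `sqlite3` module. Each "mapper" is just one bounded `SELECT`, and the ceiling-division range arithmetic is a simplification that roughly mirrors, but does not reproduce, Sqoop's own splitter. Table and column names are interpolated directly and must be trusted.

```python
import sqlite3


def import_table(conn, table, split_col, num_mappers=4):
    """Simulate a parallel import: one bounded SELECT per (hypothetical) mapper."""
    lo, hi = conn.execute(f"SELECT MIN({split_col}), MAX({split_col}) FROM {table}").fetchone()
    if lo is None:                                   # empty table: nothing to import
        return []
    step = -(-(hi - lo + 1) // num_mappers)          # ceiling division
    chunks = []
    for start in range(lo, hi + 1, step):
        end = min(start + step - 1, hi)
        rows = conn.execute(
            f"SELECT * FROM {table} WHERE {split_col} BETWEEN ? AND ? ORDER BY {split_col}",
            (start, end),
        ).fetchall()
        chunks.append(rows)                          # in Sqoop, each mapper writes its own output file
    return chunks


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(i, f"c{i}") for i in range(1, 11)])
print([len(c) for c in import_table(conn, "customers", "id")])   # [3, 3, 3, 1]
```

Splitting on value ranges assumes keys are spread fairly evenly. A skewed key distribution yields unbalanced chunks, which is why the split column is worth choosing deliberately.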

Oozie: A workflow scheduler system for managing Hadoop jobs, including non-MapReduce jobs such as Pig, Hive, or Sqoop jobs. Check out more.
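
An Oozie workflow is a directed acyclic graph (DAG) of actions. An action runs only after the actions it depends on have finished, and independent actions can run in parallel, which Oozie expresses with fork and join nodes. Oozie itself reads workflow definitions written in XML. The sketch below only shows the underlying scheduling logic, using Python's standard `graphlib` module (Python 3.9+). The action names are made up.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each action maps to the set of actions it depends on.
workflow = {
    "sqoop-import": set(),
    "pig-clean": {"sqoop-import"},
    "hive-report": {"pig-clean"},
    "mahout-train": {"pig-clean"},
    "email-notify": {"hive-report", "mahout-train"},
}


def parallel_stages(dag):
    """Group actions into stages; every action in a stage can run concurrently."""
    ts = TopologicalSorter(dag)
    ts.prepare()                        # raises graphlib.CycleError if the graph has a cycle
    stages = []
    while ts.is_active():
        ready = sorted(ts.get_ready())  # actions whose dependencies are all done
        stages.append(ready)
        ts.done(*ready)
    return stages


for stage in parallel_stages(workflow):
    print(stage)
# ['sqoop-import']
# ['pig-clean']
# ['hive-report', 'mahout-train']
# ['email-notify']
```

The third stage corresponds to a fork/join pair in Oozie terms: both actions start once `pig-clean` succeeds, and `email-notify` waits for both.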

Pig: Initially developed at Yahoo!, Pig is a framework consisting of a high-level scripting language, Pig Latin, together with a runtime environment that allows users to run MapReduce jobs on a Hadoop cluster. Follow the link for more on Pig.
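
A few lines of Pig Latin (a word count, quoted in the comment below) are compiled into map, shuffle, and reduce phases that would take far more code to write by hand. The plain-Python sketch below spells out those three phases for the same word count. It runs in a single process and illustrates the MapReduce model, not Pig's actual execution plan. Splitting on whitespace is a simplification of Pig's `TOKENIZE`.

```python
from collections import defaultdict
from itertools import chain

# Roughly equivalent Pig Latin:
#   lines   = LOAD 'input.txt' AS (line:chararray);
#   words   = FOREACH lines GENERATE FLATTEN(TOKENIZE(line)) AS word;
#   grouped = GROUP words BY word;
#   counts  = FOREACH grouped GENERATE group, COUNT(words);


def map_phase(line):
    """Mapper: emit (word, 1) for every whitespace-separated word in a line."""
    return [(word, 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between the map and reduce phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reducer: sum the counts for one word."""
    return key, sum(values)


def word_count(lines):
    pairs = chain.from_iterable(map_phase(line) for line in lines)
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


print(word_count(["the cat sat", "the dog sat"]))
# {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```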

Mahout: Mahout is a scalable machine learning and data mining library. Check out this video on Mahout and machine learning.
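
Clustering with k-means is one of the algorithms Mahout has provided. The single-machine sketch below (Lloyd's algorithm on 2-D points) shows what each iteration computes. It is not Mahout's API. In a MapReduce-style implementation, the "assign each point to its nearest centroid" step is the part typically spread across mappers, and the "average each cluster" step across reducers. The starting centroids are supplied by the caller here, which is an assumption. Real implementations usually pick them randomly or with a seeding heuristic.

```python
import math


def kmeans(points, centroids, iterations=10):
    """Plain k-means on 2-D points, starting from the given list of centroid tuples."""
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if empty).
        new = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new == centroids:  # converged: assignments no longer change the centroids
            break
        centroids = new
    return centroids


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
print(kmeans(points, [(0, 0), (10, 10)]))
```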

R Connectors: R Connectors let R users work with data stored in Hadoop, for example reading from HDFS and running statistical analyses on the cluster from within R. More on Oracle R Connectors.

Hive: Hive is an open-source data warehouse system for querying and analyzing large datasets stored in Hadoop (HDFS) using a SQL-like language, HiveQL. More on Hive at Apache.
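
Hive takes a "schema on read" approach. Data sits in HDFS as ordinary files, and a table definition (column names, types, field delimiter) is applied only when a query reads them. Missing or unparsable fields come back as NULL rather than failing the load. The sketch below mimics that behaviour for tab-delimited lines and then runs the equivalent of a simple `GROUP BY`. The schema, the tab delimiter (Hive's own default is `\001`), and the function names are assumptions made for illustration.

```python
# Hypothetical table definition, applied at read time rather than at write time.
SCHEMA = [("user_id", int), ("country", str), ("amount", float)]
DELIMITER = "\t"


def parse(cast, value):
    """Convert one raw field; missing or unparsable values become None (Hive's NULL)."""
    if value is None:
        return None
    try:
        return cast(value)
    except ValueError:
        return None


def read_rows(raw_lines, schema=SCHEMA, delimiter=DELIMITER):
    """Apply the schema to raw text lines; short lines are padded with None, extra fields ignored."""
    rows = []
    for line in raw_lines:
        fields = line.rstrip("\n").split(delimiter) + [None] * len(schema)
        rows.append({name: parse(cast, value) for (name, cast), value in zip(schema, fields)})
    return rows


def sum_by_country(rows):
    # Same idea as: SELECT country, SUM(amount) FROM sales GROUP BY country;
    totals = {}
    for r in rows:
        if r["amount"] is not None:  # SUM ignores NULLs
            totals[r["country"]] = totals.get(r["country"], 0.0) + r["amount"]
    return totals


raw = ["1\tUS\t10.5", "2\tDE\t3", "3\tUS\tn/a", "4\tDE"]
print(sum_by_country(read_rows(raw)))   # {'US': 10.5, 'DE': 3.0}
```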

HBase: It is a column-family-oriented, non-relational database management system that runs on top of HDFS. Here is a 3-minute video on HBase.
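
In HBase's data model, rows are kept sorted by row key. Each cell is addressed by row key, `family:qualifier` column, and timestamp, and several timestamped versions of a cell can be retained. Range scans run from a start row (inclusive) to a stop row (exclusive). The in-memory `ToyHTable` below is a hypothetical model of those ideas, not the HBase client API. In particular, the `max_versions` default of 3 is an arbitrary choice for the sketch; in HBase the number of versions is configured per column family.

```python
import bisect


class ToyHTable:
    """In-memory model: sorted row keys -> {"family:qualifier": [(ts, value), ...] newest first}."""

    def __init__(self, max_versions=3):
        self.max_versions = max_versions
        self.keys = []   # row keys, kept sorted (HBase sorts row keys lexicographically)
        self.rows = {}

    def put(self, row, column, value, ts):
        if row not in self.rows:
            bisect.insort(self.keys, row)
            self.rows[row] = {}
        versions = self.rows[row].setdefault(column, [])
        versions.append((ts, value))
        versions.sort(reverse=True)        # newest timestamp first
        del versions[self.max_versions:]   # retain only the most recent versions

    def get(self, row, column):
        """Return the newest value of one cell, or None if it does not exist."""
        versions = self.rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def scan(self, start_row, stop_row):
        """Yield (row, {column: latest value}) for start_row <= row < stop_row, in key order."""
        i = bisect.bisect_left(self.keys, start_row)
        while i < len(self.keys) and self.keys[i] < stop_row:
            row = self.keys[i]
            yield row, {col: v[0][1] for col, v in self.rows[row].items()}
            i += 1


t = ToyHTable(max_versions=2)
t.put("user#001", "info:name", "Ann", ts=1)
t.put("user#001", "info:name", "Anne", ts=2)
t.put("user#002", "info:name", "Bob", ts=1)
t.put("user#010", "info:name", "Cy", ts=1)
print(t.get("user#001", "info:name"))                      # Anne
print([row for row, _ in t.scan("user#001", "user#010")])  # ['user#001', 'user#002']
```

Because rows are sorted by key, designing row keys (here a `user#` prefix plus a zero-padded id) determines which range scans are cheap.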

Ambari: This component of the Hadoop ecosystem is used for provisioning, managing, and monitoring Hadoop clusters. Check this link for more information.